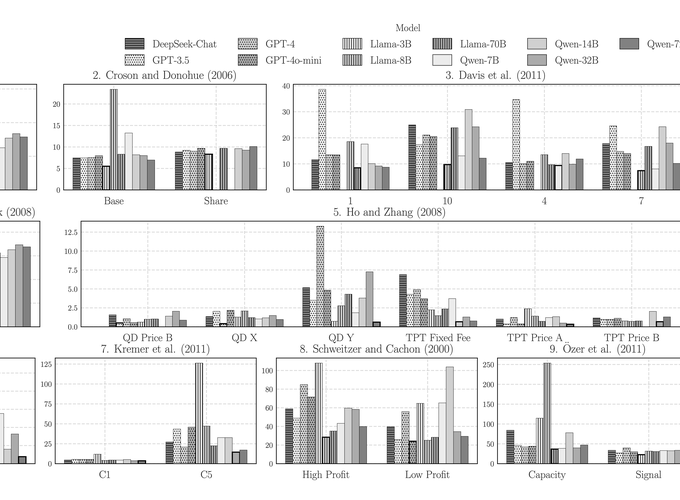

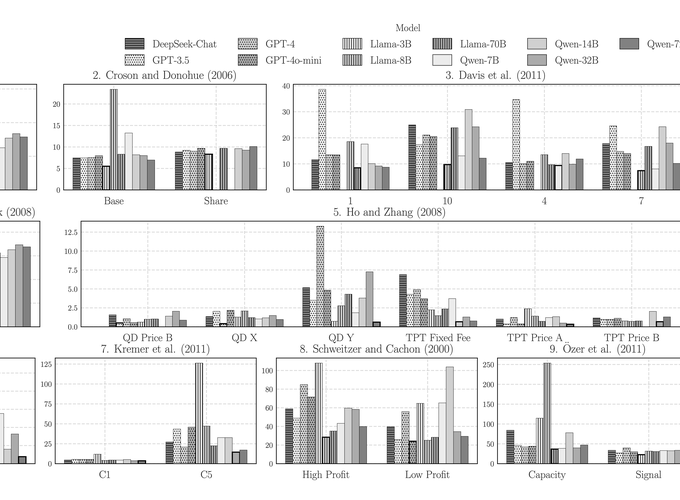

Large language models (LLMs) are emerging tools for simulating human behavior in business, economics, and social science, offering a lower-cost complement to laboratory experiments, field studies, and surveys. This paper evaluates how well LLMs replicate human behavior in operations management. Using nine published experiments in behavioral operations, we assess two criteria: whether LLMs replicate the outcomes of the original hypothesis tests, and how closely their response distributions align with the human data, measured by Wasserstein distance. LLMs reproduce most hypothesis-level effects, capturing key decision biases, but their response distributions diverge from the human data, even for strong commercial models. We also test two lightweight strategies, Chain-of-Thought prompting and hyperparameter tuning, which reduce this misalignment and can sometimes let smaller or open-source models match or surpass larger systems.
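To make the distributional criterion concrete, here is a minimal sketch of the empirical 1-Wasserstein (earth mover's) distance between a human sample and an LLM sample of scalar decisions, $W_1 = \int |F_H(t) - F_L(t)|\,dt$, where $F_H$ and $F_L$ are the empirical CDFs. It assumes one numeric response per subject (for instance, an order quantity in one experimental condition) and the order-1 distance on the raw scale; the paper's exact variant (order, normalization, multi-dimensional responses) may differ. The function name `wasserstein_1d` and the sample values are hypothetical.

```python
from bisect import bisect_right


def wasserstein_1d(human: list[float], llm: list[float]) -> float:
    """Empirical 1-Wasserstein distance between two 1-D samples.

    Integrates |F_h(t) - F_l(t)| over t, where F_h and F_l are the empirical
    CDFs. Both CDFs are step functions, so summing over the gaps between
    consecutive pooled observations gives the exact value.
    """
    if not human or not llm:
        raise ValueError("both samples must be non-empty")
    h = sorted(human)
    l = sorted(llm)
    points = sorted(h + l)  # pooled breakpoints of both step functions
    total = 0.0
    for left, right in zip(points, points[1:]):
        width = right - left
        if width == 0:
            continue  # tied observations contribute nothing
        # Both empirical CDFs are constant on [left, right).
        f_h = bisect_right(h, left) / len(h)
        f_l = bisect_right(l, left) / len(l)
        total += abs(f_h - f_l) * width
    return total
```

For example, `wasserstein_1d([100, 120, 140], [120, 120, 120])` compares a spread-out hypothetical human sample with a degenerate LLM sample that has the same mean; the distance is about 13.3 rather than zero, which is the kind of divergence a comparison of means alone would not reveal. For unweighted 1-D samples, `scipy.stats.wasserstein_distance` computes the same quantity.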