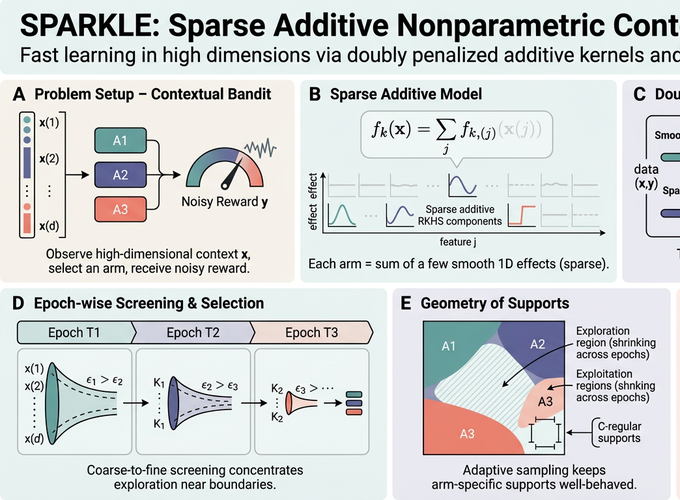

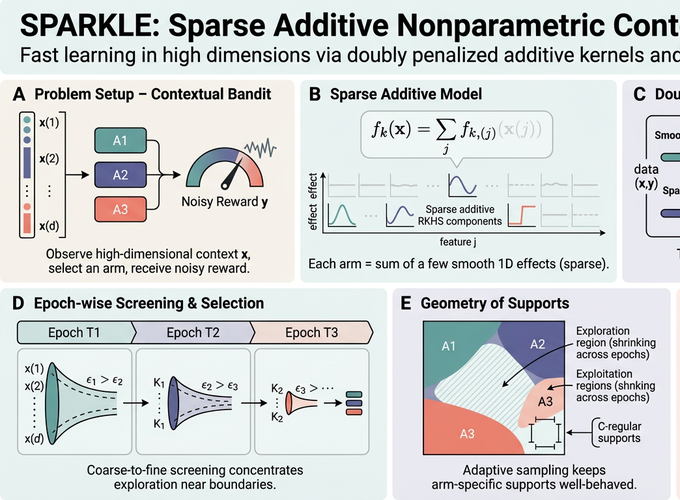

Personalized services are central to today’s digital economy, and their sequential decisions are often modeled as contextual bandits. Modern applications pose two main challenges: high-dimensional covariates and the need for nonparametric models to capture complex reward-covariate relationships. We propose SPARKLE, a novel contextual bandit algorithm based on a sparse additive reward model that addresses both challenges through (i) a doubly penalized estimator for nonparametric reward estimation and (ii) an epoch-based design with adaptive screening to balance exploration and exploitation. We prove a sublinear regret bound that grows only logarithmically in the covariate dimensionality; to our knowledge, this is the first such result for nonparametric contextual bandits with high-dimensional covariates. We also derive an information-theoretic lower bound, and the gap to the upper bound vanishes as the reward smoothness increases. Extensive experiments on synthetic data and real data from video recommendation and personalized medicine show strong performance in high-dimensional settings.
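To make the model class concrete, a sparse additive reward model can be sketched as follows. The notation here is assumed for illustration and is not taken from the paper: $K$ arms indexed by $k$, a $d$-dimensional covariate $X_t$, an action $A_t$, an active set $S_k$ of at most $s$ covariates, and univariate component functions $f_{k,j}$.

$$
\mathbb{E}\left[ Y_t \mid X_t = x,\ A_t = k \right] = \sum_{j \in S_k} f_{k,j}(x_j),
\qquad S_k \subseteq \{1, \dots, d\}, \quad |S_k| \le s \ll d .
$$

A doubly penalized estimator for such a model typically combines a sparsity penalty on the empirical norm of each component with a smoothness penalty on its function-space norm. One generic form, with hypothetical tuning parameters $\lambda$ and $\rho$ and not necessarily the exact estimator used by SPARKLE, is

$$
\hat f_k \in \arg\min_{f_1, \dots, f_d}\;
\frac{1}{2 n_k} \sum_{t :\, A_t = k} \Big( Y_t - \sum_{j=1}^{d} f_j(X_{t,j}) \Big)^{2}
+ \sum_{j=1}^{d} \Big( \lambda \, \| f_j \|_{n} + \rho \, \| f_j \|_{\mathcal{H}} \Big),
$$

where $n_k$ is the number of rounds in which arm $k$ was pulled, $\| \cdot \|_{n}$ is the empirical $L_2$ norm over those rounds, and $\| \cdot \|_{\mathcal{H}}$ is a smoothness (semi)norm on the assumed function class. The first penalty drives irrelevant components to zero, which is what allows the dependence on $d$ to be only logarithmic; the second controls the roughness of the components that remain.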